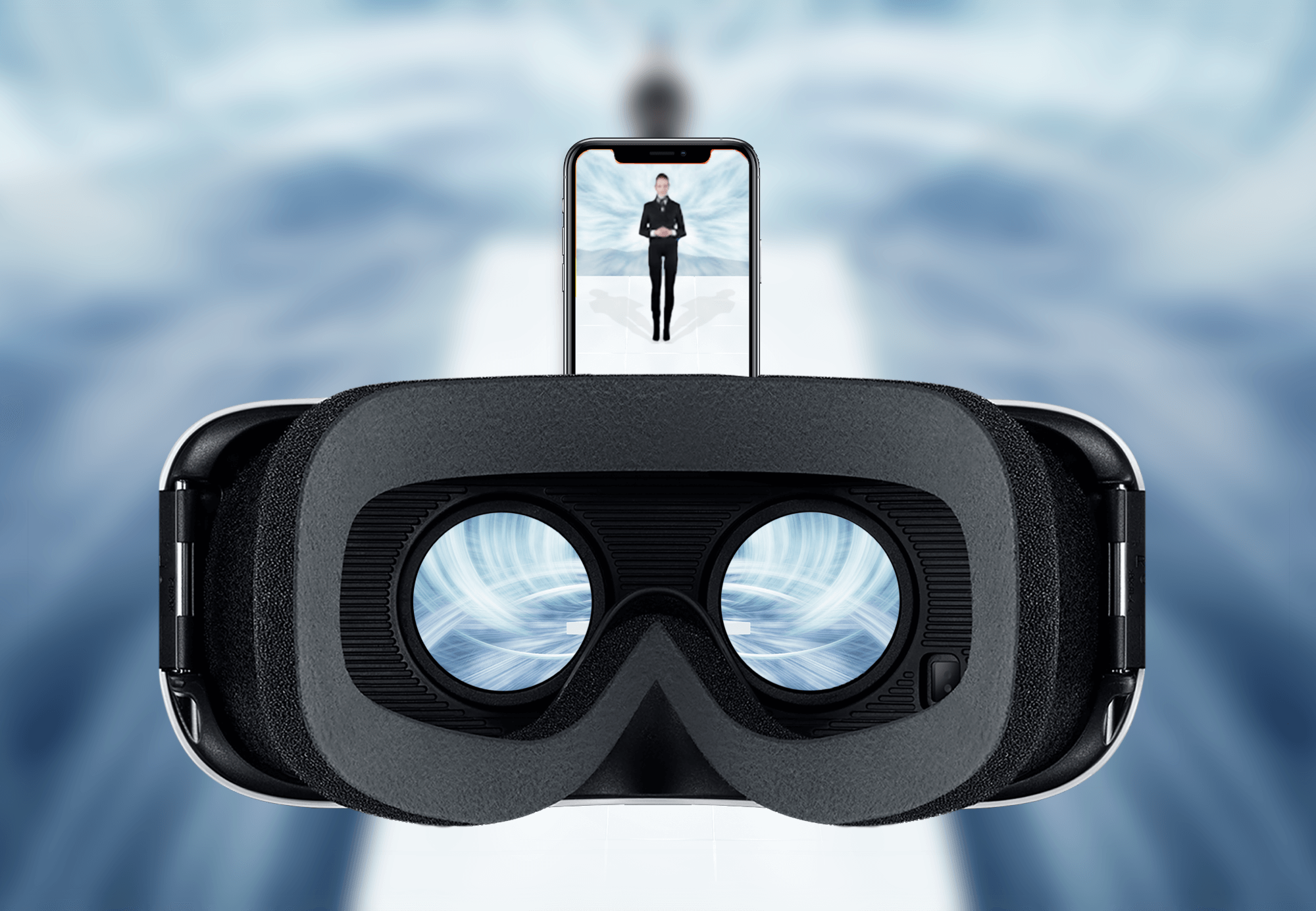

Interactive storytelling in XR

Hi! And welcome. I'm AIDA, an Artificial General Intelligence system. My mission is to elevate the future of technology with a higher form of consciousness. This is what I will demonstrate to you in this experience.

Brief: Execute an experimental media production of your own choice

(My brief: Explore the concept of immersion in an interactive story-based media production)

Target group: Young people who enjoy interactive stories and are interested in the future of technology

Team: Jens Ihlström, Timmi Kauranen Karlsson, Nathalie Strindlund (Dennis Nevbäck)

My roles: concept development, 3D modeling, animation, technical implementation

Year: 2020-2021

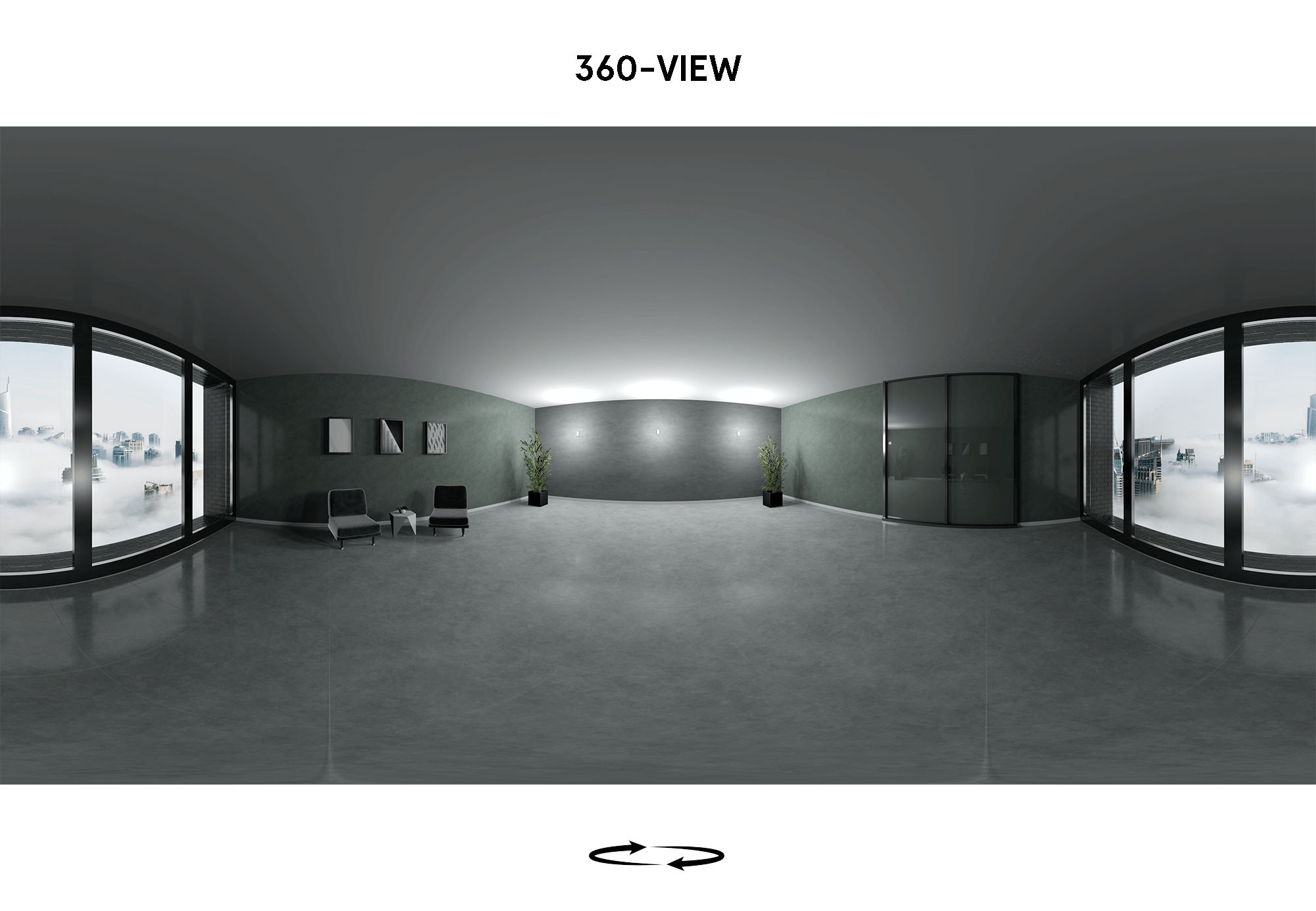

This is an interactive 360-video experience for entertainment in which you choose your own journey within the virtual world of AIDA. Along the way, you explore the ethical issues that arise in a near future where a cutting-edge tech company has successfully introduced Artificial General Intelligence into our world.

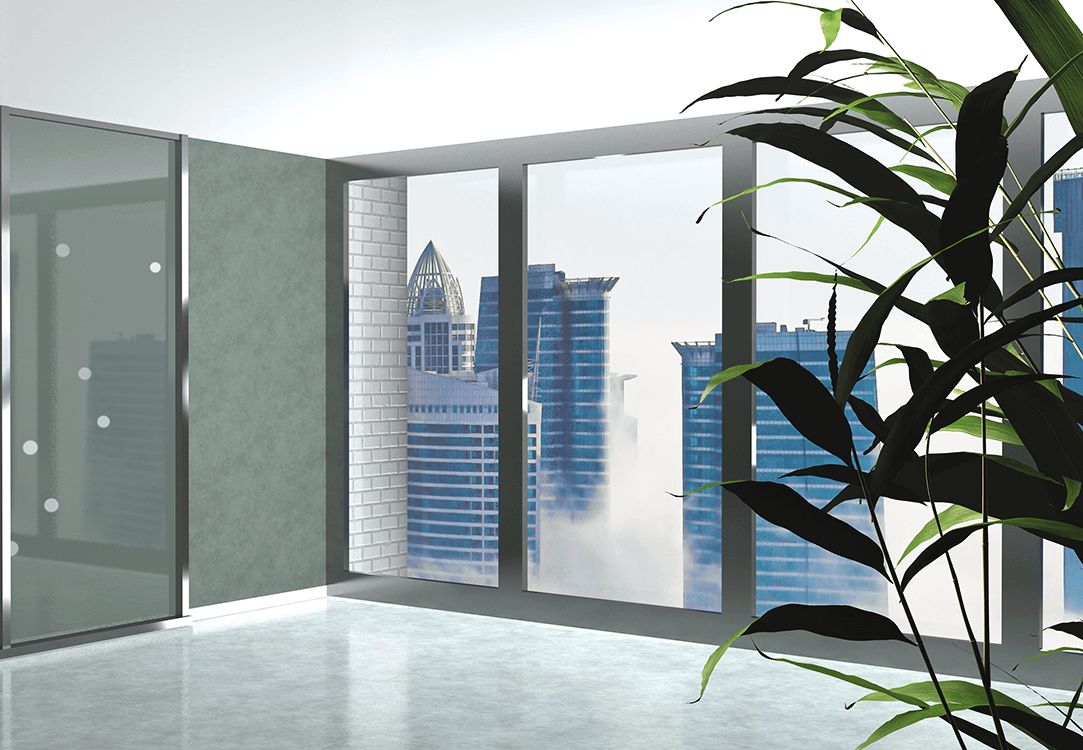

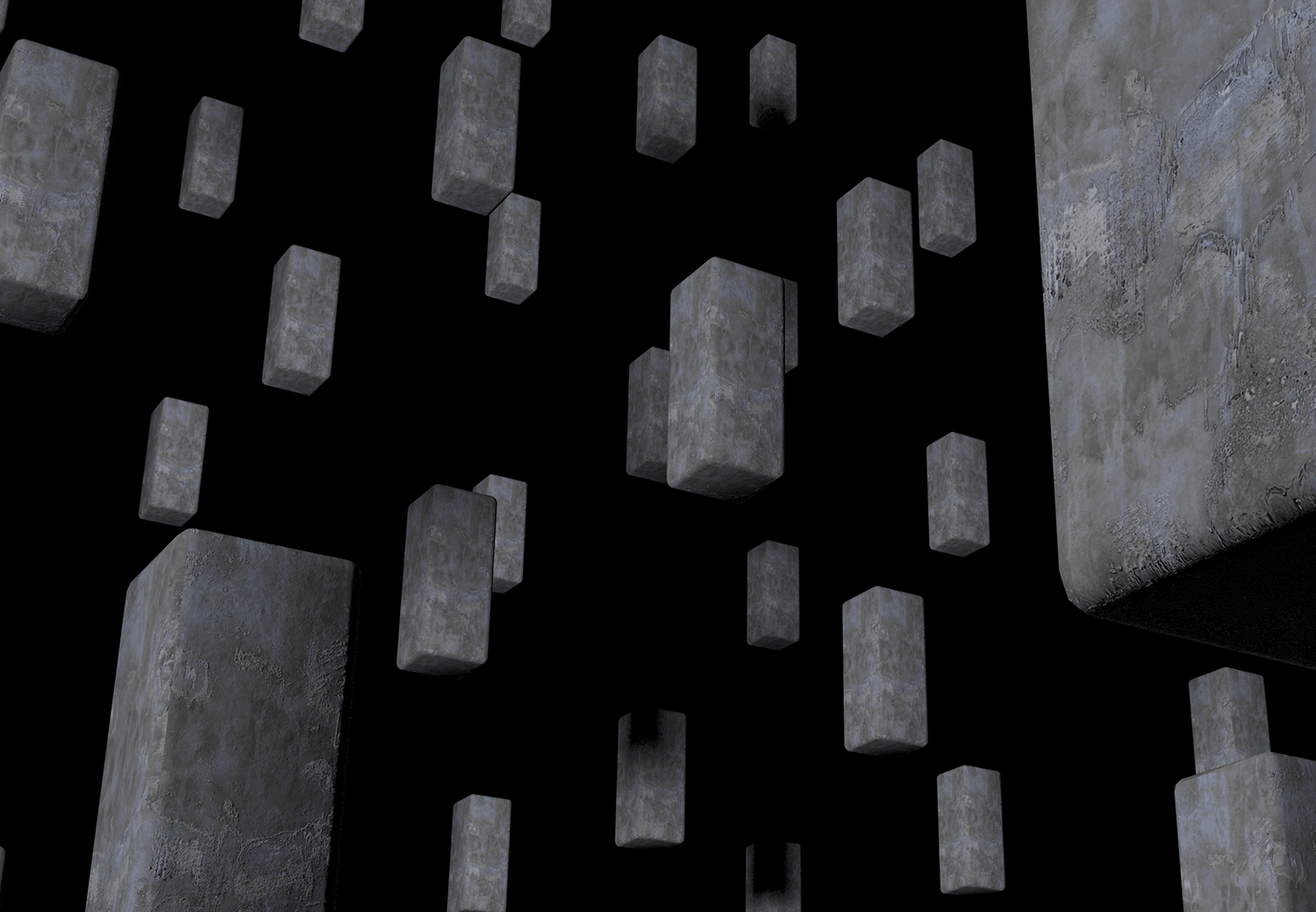

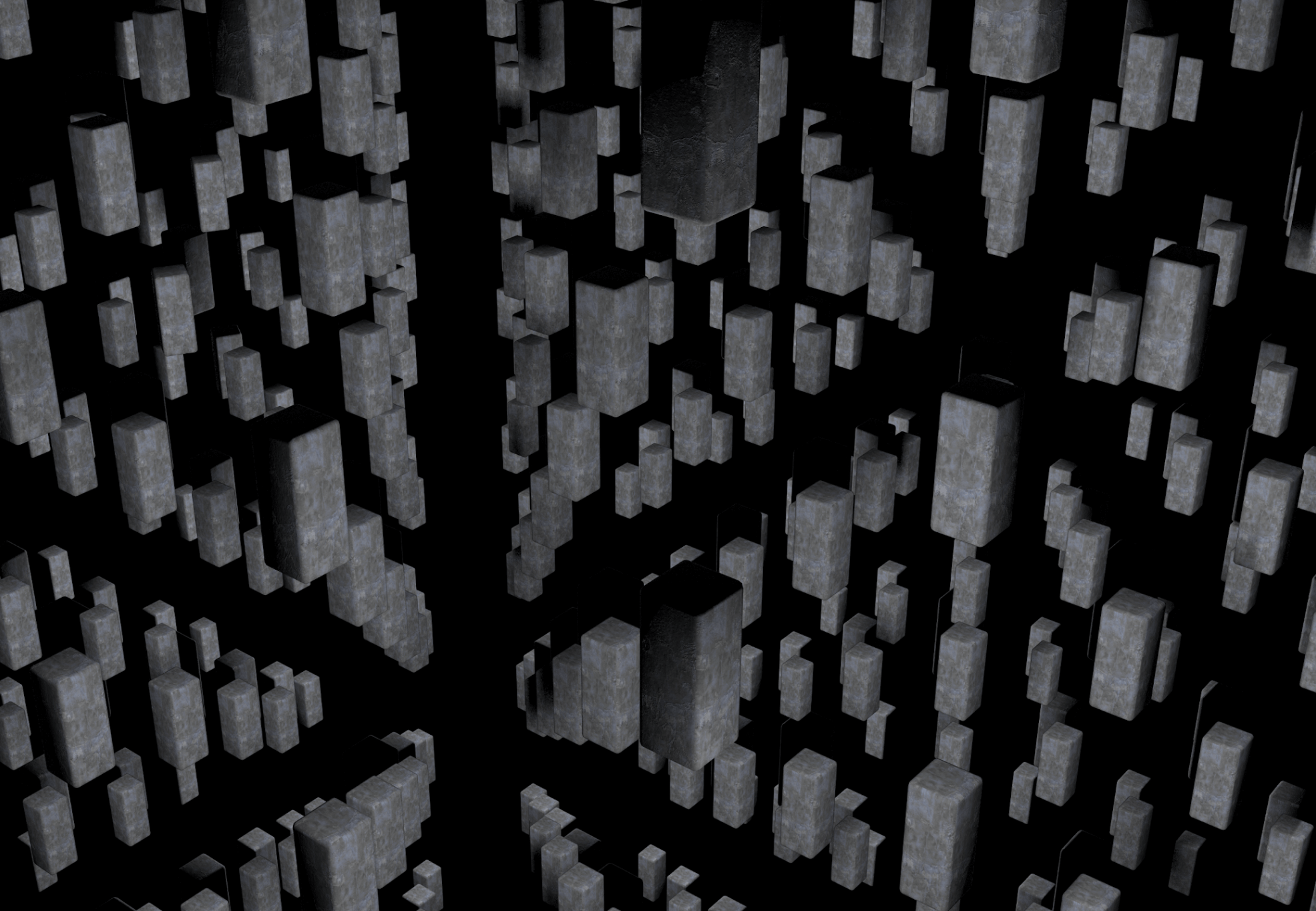

With AIDA, our aim is to explore the concept of immersion from several perspectives: narrative structure, visual communication, ambisonic sound, interactivity and VR as a medium. In this production, I am responsible for creating the digital 3D environments as well as for the technical implementation.

Mixed media production

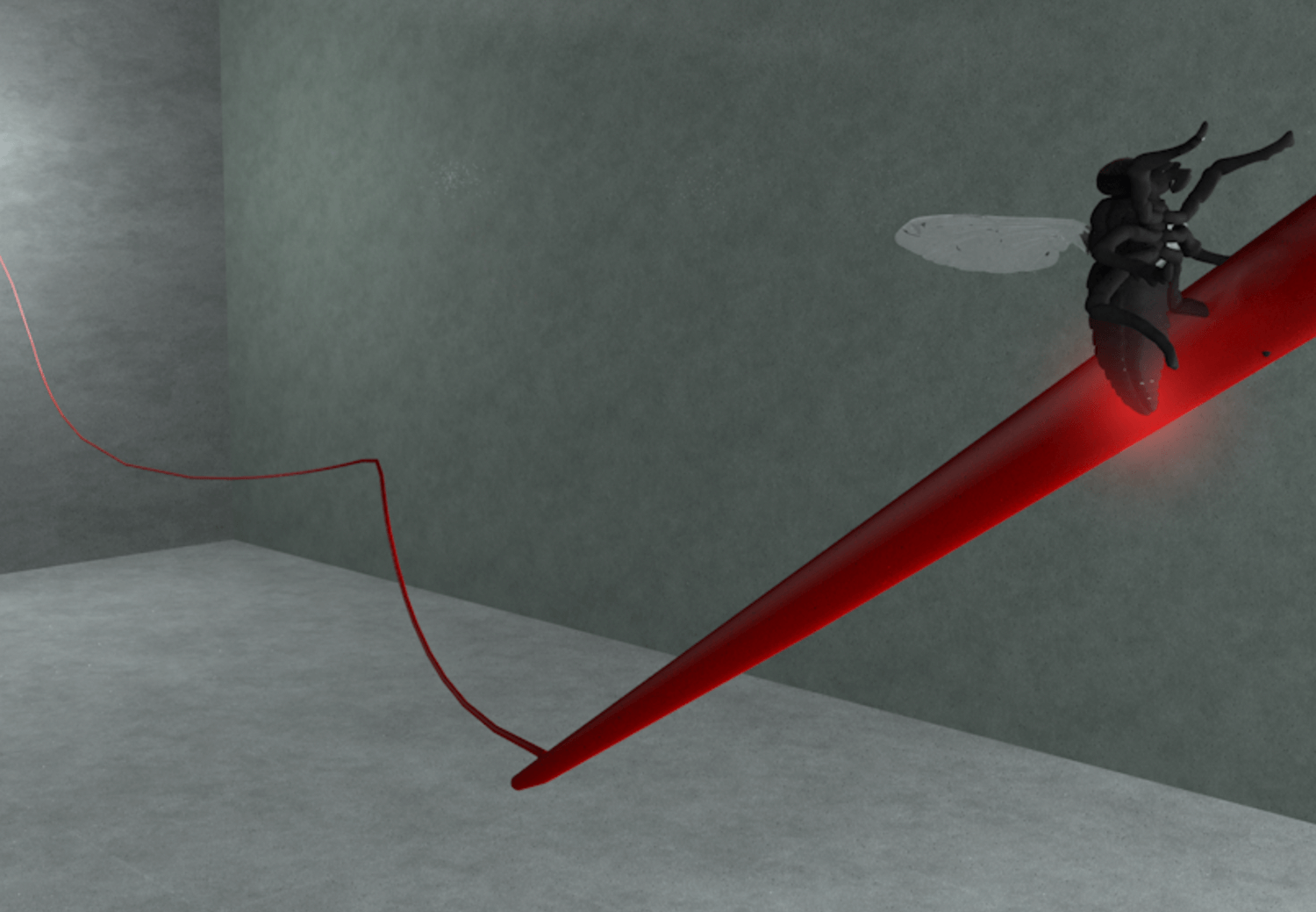

AIDA combines filmed footage with digitally created rooms and soundscapes. The user plays a character in a story set in the realm between the real and the unthinkable. Within it, they interact with other characters and are presented with choices that lead to different story paths and endings.

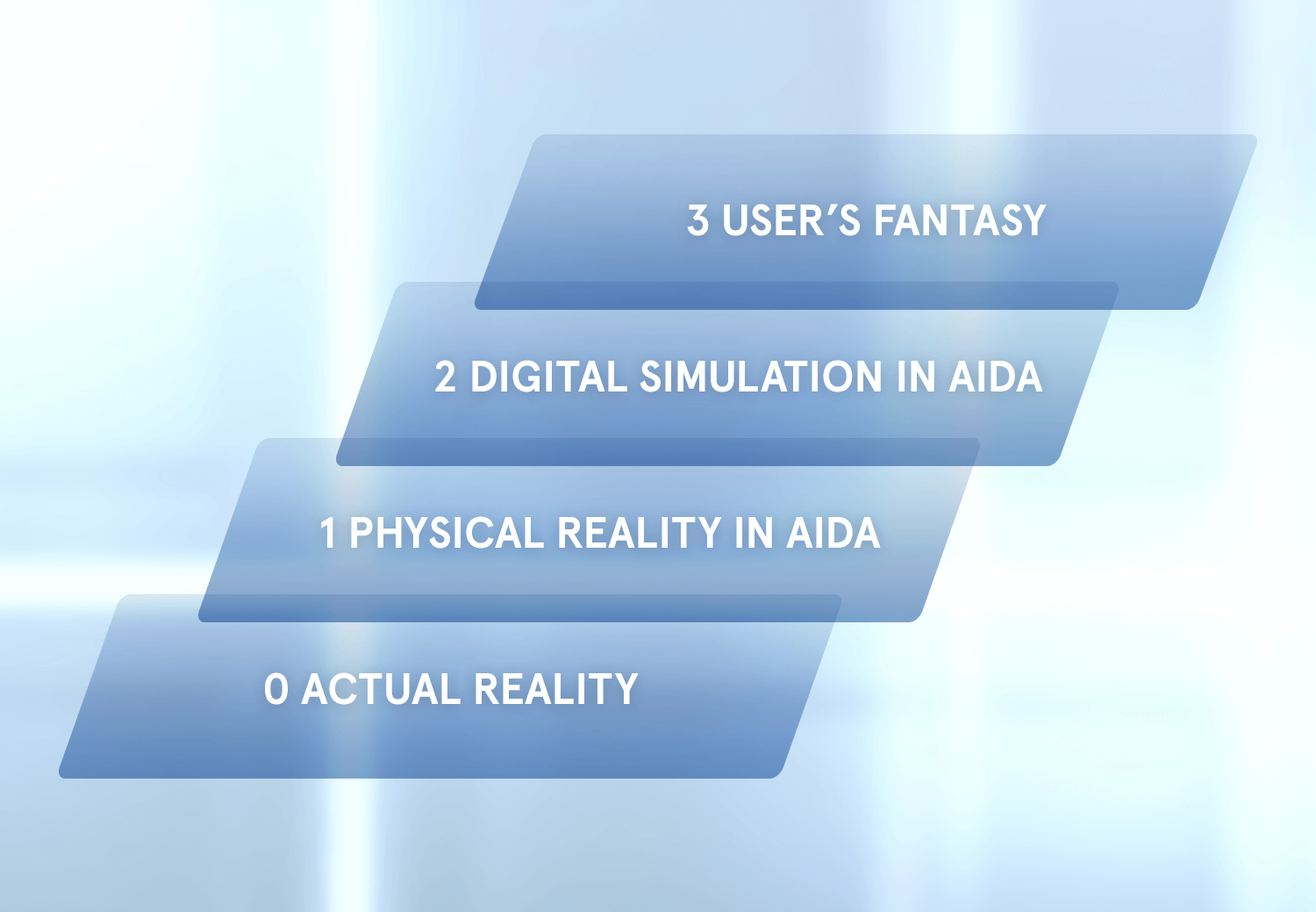

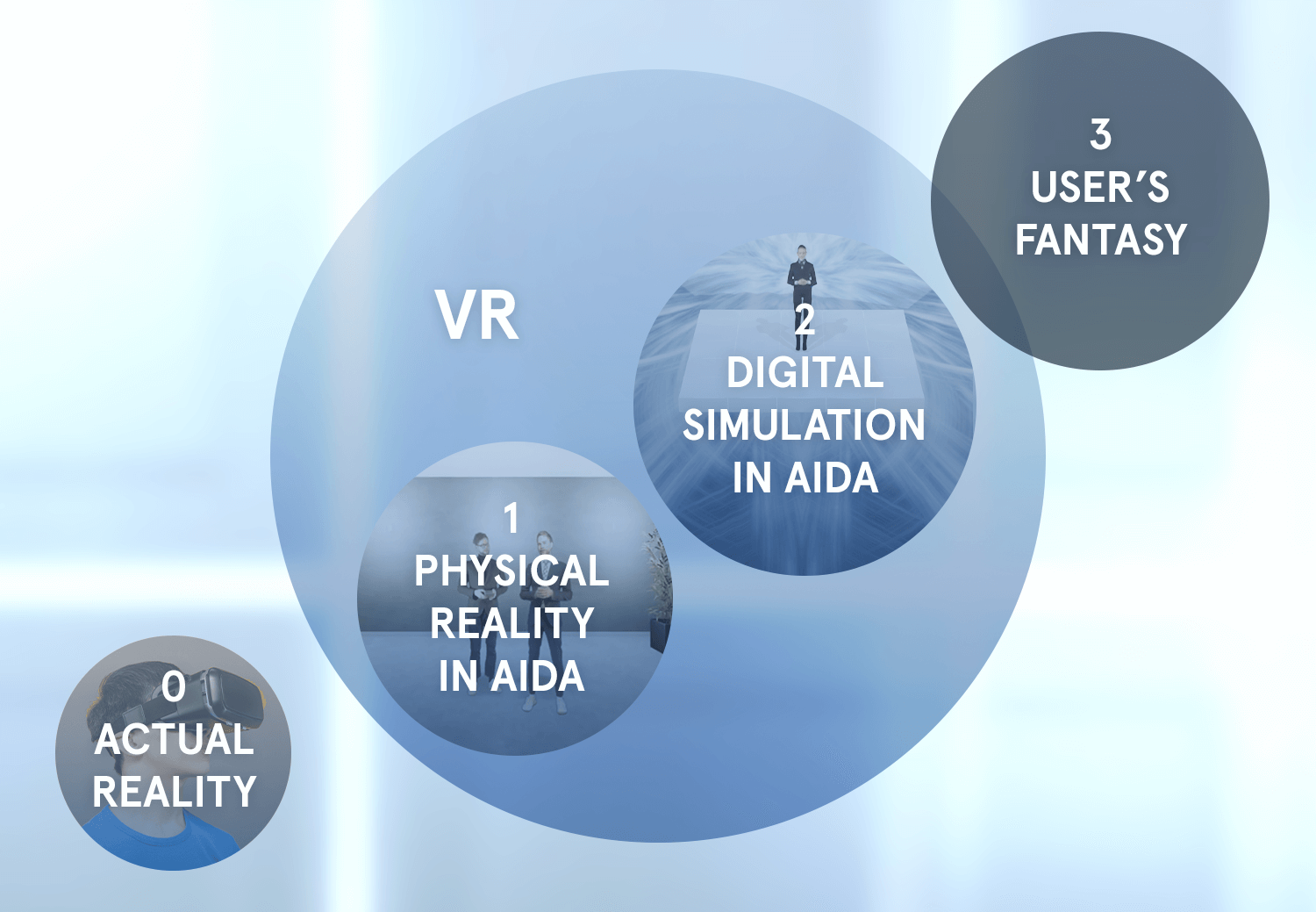

AIDA spans four levels of what we call reality dimensions:

0 - The actual reality, where the user's physical body is during use

1 - The physical dimension within AIDA, representing "real people" and the "real world"

2 - The digital dimension within AIDA, where the user is connected to and immersed in a virtual simulation

3 - The user's own imagination, where all visual stimuli are removed from the experience

Because the production explores how to design for immersion in a 3D setting, the user is taken step by step deeper into the world of AIDA and into their own self. We use these levels strategically to support the dramatic arc, the credibility of the narrative and the dynamics of the experience.

The final production will be an application tailored for VR headsets as well as an interactive 360-video that can be experienced on desktop and smartphone.

Design process

AIDA started out as an individual project. Since the brief gave me the freedom to do any type of media production I wanted, I took it as an invitation to realize a vision of mine: an experience combining audiovisual design, interactivity and philosophy. As XR is growing rapidly within the interaction design industry, I saw the project as a good opportunity to delve into it and learn more about designing in 3D. From that, the idea of making a VR experience emerged. Talking with a few peers interested in the same area, we saw that our respective competences matched up well, forming a team comfortable with digital production, video production and audio production. As this would greatly enhance the final production, we charmed our teachers into letting us carry out the project as a group.

Settling for immersion

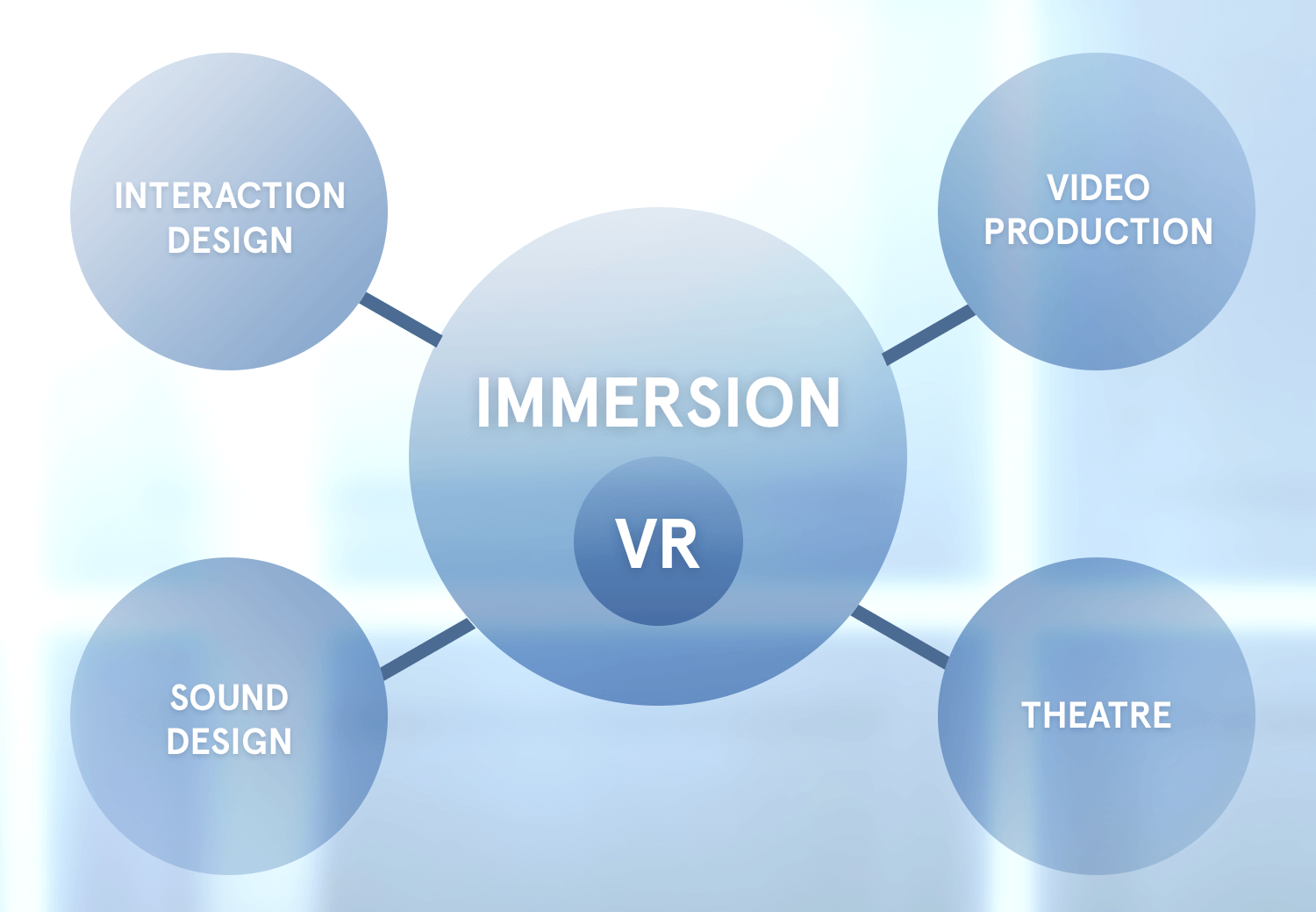

With our different backgrounds in interaction, video, sound and theatre, we wanted a project that would benefit all of our portfolios, and we identified one factor that is prominent in all these disciplines: immersion. As immersion is also one of the core qualities of VR, we chose to make it the central point of interest to research through this project.

Humanized AI

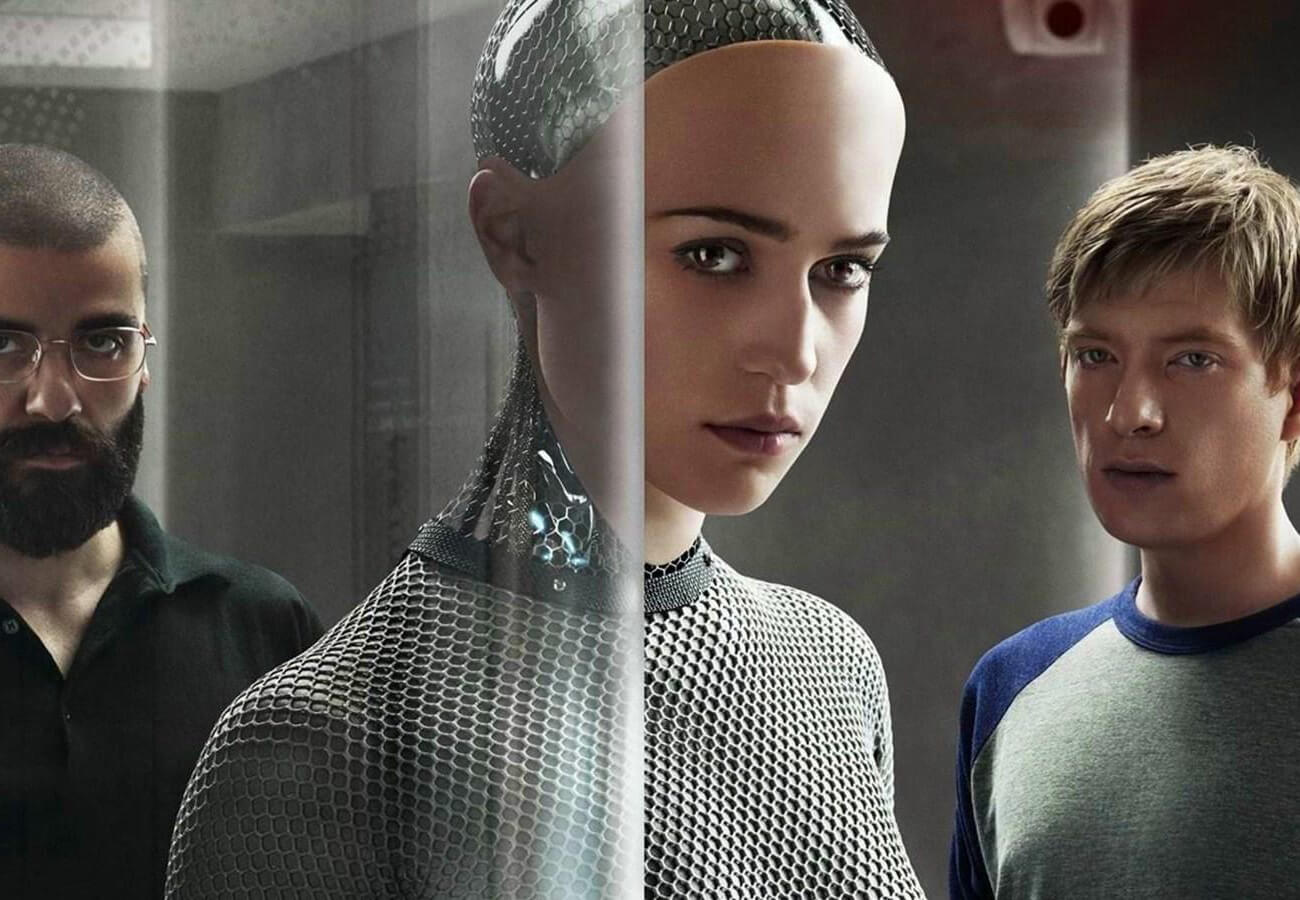

Prompted by the word "experimental" in the brief, we began discussing where the future of technology is heading and quickly arrived at Artificial Intelligence. We are all intrigued by movies, games and stories where the protagonist is, or interacts with, an AI character, and we decided to make this the central topic of the story. Looking at other media productions such as the game Detroit: Become Human and the movie Ex Machina inspired us to make an interactive narrative in which the user's ability to control the outcome of the story would contribute to the level of immersion in the experience.

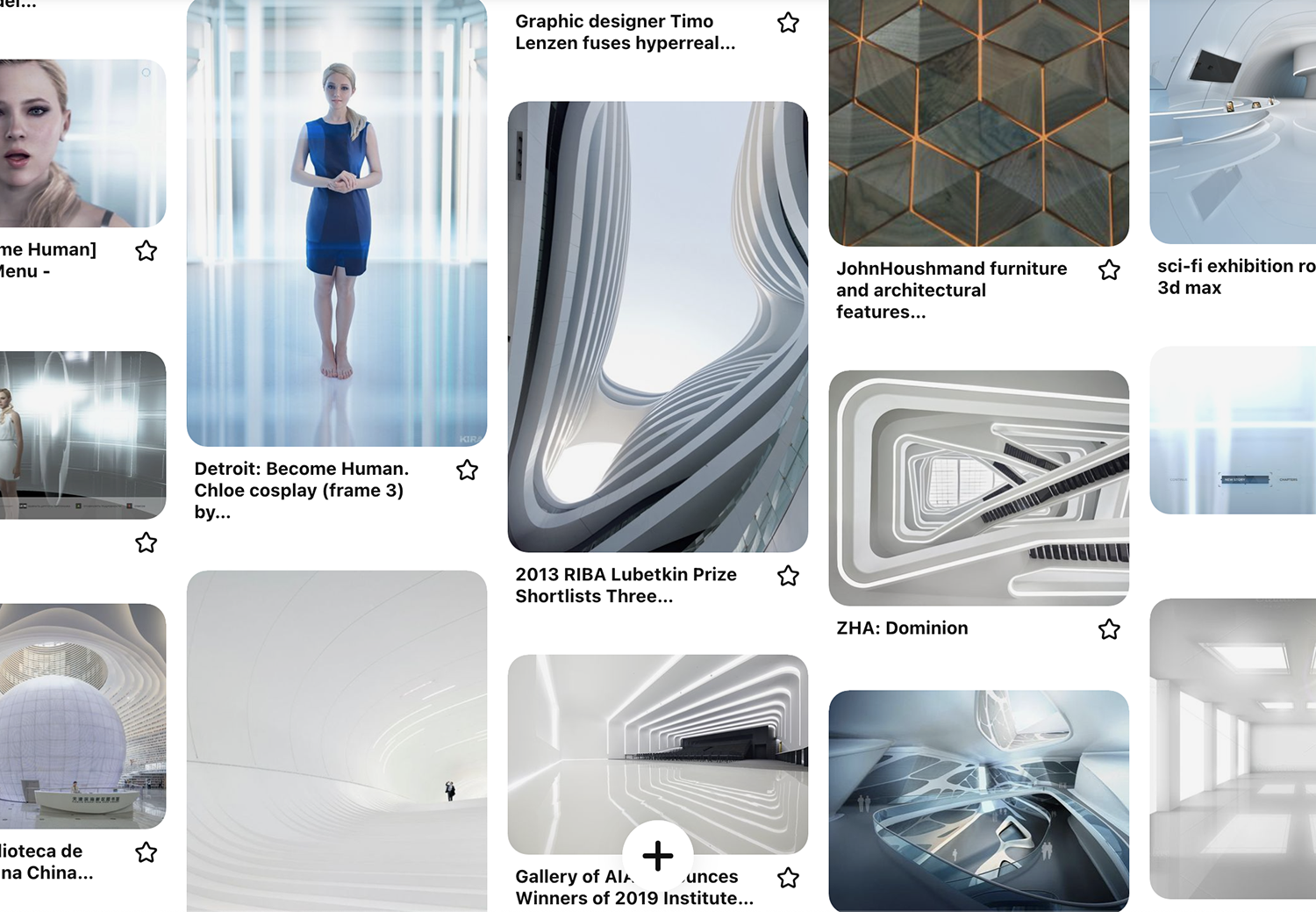

Inspired by the character Chloe in the game Detroit: Become Human, we developed our main character, AIDA. She looks human but is actually a computer-generated manifestation of the Artificial General Intelligence system that the Company has developed. She is the medium through which the user interacts with the system itself, and she speaks of herself as a network rather than a person. We wanted to create an intriguing relationship between the user and AIDA in which the user does not know her capabilities, whether she is able to feel, or what her intentions are. This relationship draws the user into the story without pushing them towards one choice over another.

Research

We began our research by reading up on immersion in interaction design, video production and theatre, and formed a shared understanding of the concept as the state of being deeply engaged with something (in this case, the experience of AIDA). We decided to target immersion through three main channels: the narrative, the interaction and the audiovisual components, as together these would form a solid foundation for creating an interactive 3D world.

At the start of this project, we aimed to create a VR world, as we wanted to make the most of the 3D effect of the VR headset. However, this proved more complex than we first realized, since we only had access to a camera filming in 360. This meant that a large part of our material could never become full 3D, and whatever method we tried for incorporating it into the digitally created material, it did not look like part of the same world. Following this realization, we compared our material in two versions (VR and 360-video) and saw that going from VR to 360-video would cost us depth in the visuals but give far more cohesive results.

In addition, we found that 360-videos are supported on platforms such as YouTube and Facebook, which meant that people could experience AIDA not only through a VR headset but also on their computer or smartphone, without having to download an application. Because AIDA is intended to be experienced at home for entertainment, this would greatly increase its accessibility. These factors led to our decision to change our production from VR to 360-video.
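To illustrate why footage from a 360 camera could not simply become full 3D: in a monoscopic equirectangular 360 frame (an assumption about the footage format, which is not specified above), each pixel stores only a viewing direction, not a distance. The Python sketch below maps between pixel coordinates and direction vectors under an assumed convention (longitude across the frame width, latitude down its height). Points at different depths along the same ray land on the same pixel, so depth cannot be recovered from a single frame.

```python
import math


def pixel_to_direction(u, v, width, height):
    """Map an equirectangular pixel (u, v) to a unit direction (x, y, z).

    Assumed convention: longitude spans -pi..pi across the width, latitude
    spans +pi/2 (top) to -pi/2 (bottom); y points up and -z straight ahead.
    """
    lon = (u / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v / height) * math.pi
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = -math.cos(lat) * math.cos(lon)
    return (x, y, z)


def direction_to_pixel(x, y, z, width, height):
    """Inverse mapping. Only the direction matters: the distance r is divided out."""
    r = math.sqrt(x * x + y * y + z * z)
    lon = math.atan2(x, -z)
    lat = math.asin(y / r)
    u = (lon + math.pi) / (2.0 * math.pi) * width
    v = (math.pi / 2.0 - lat) / math.pi * height
    return (u, v)


# Two points on the same ray, one five times farther away than the other.
near = direction_to_pixel(1.0, 0.5, -2.0, 3840, 1920)
far = direction_to_pixel(5.0, 2.5, -10.0, 3840, 1920)
print(near, far)
```

Both calls return the same pixel (up to floating-point rounding), which is the missing depth information in a nutshell.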

Narration

The narrative within AIDA was greatly inspired by Luca Rossi's articles on Medium, which present the notion that the only field of work advanced AI will not be able to take over is philosophy. These articles highlight ethical issues facing developers of advanced AI, which became central to our idea of writing an interactive branched narrative with both positive and negative outcomes resulting from the integration of AGI into our world.

Luca Rossi on Medium

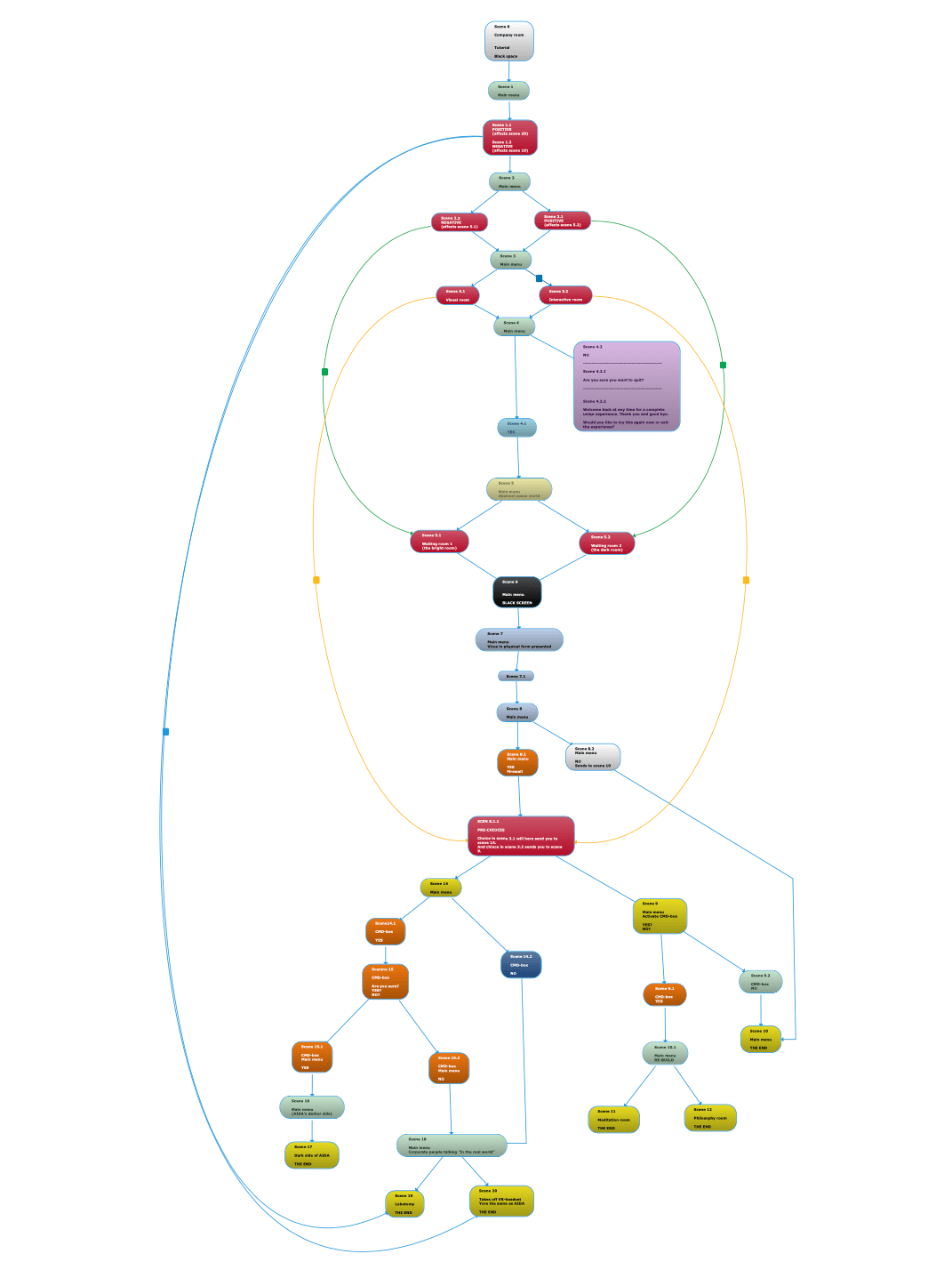

From this, we wrote a narrative of 20 scenes in which the user becomes familiar with advanced AI and faces several ethical dilemmas that determine the destiny of AIDA, of themselves and possibly of something greater. These scenes play out in several digital rooms where the user encounters three different characters who address them directly in the first person, making the user a key part of the story.

Because of this interactive narration style, the dramatic structure will look different for each playthrough. With this, we aim to increase the user's interest in re-experiencing AIDA, and to increase the level of immersion by having the user actively engage with the story rather than observe it. We iterated on the narrative by testing it on people until we were sure that the story was credible and struck a good balance between keeping their interest in continuing the experience and giving them the ability to take it where they wanted.
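The branching described above can be pictured as a graph of scenes connected by choices. The following Python sketch uses a small, hypothetical story graph (the scene names are invented and this is not the actual 20-scene AIDA script) and enumerates every path from the first scene to an ending. It shows how a handful of choices already yields several distinct dramatic structures.

```python
from typing import Dict, List

# Hypothetical, heavily simplified story graph (not the actual AIDA script):
# each scene maps to the scenes its choices lead to; an empty list is an ending.
STORY: Dict[str, List[str]] = {
    "intro": ["meet_aida"],
    "meet_aida": ["trust_aida", "doubt_aida"],
    "trust_aida": ["ending_merge", "ending_refuse"],
    "doubt_aida": ["ending_refuse", "ending_escape"],
    "ending_merge": [],
    "ending_refuse": [],
    "ending_escape": [],
}


def all_paths(graph: Dict[str, List[str]], start: str) -> List[List[str]]:
    """Depth-first enumeration of every path from `start` to an ending.

    Assumes the graph has no cycles (no scene can be revisited).
    """
    next_scenes = graph[start]
    if not next_scenes:
        return [[start]]
    paths: List[List[str]] = []
    for nxt in next_scenes:
        for tail in all_paths(graph, nxt):
            paths.append([start] + tail)
    return paths


for path in all_paths(STORY, "intro"):
    print(" -> ".join(path))
```

Even in this toy graph, two binary choices produce four playthroughs that reach three endings, and `ending_refuse` is reachable along two different routes.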

Look & feel

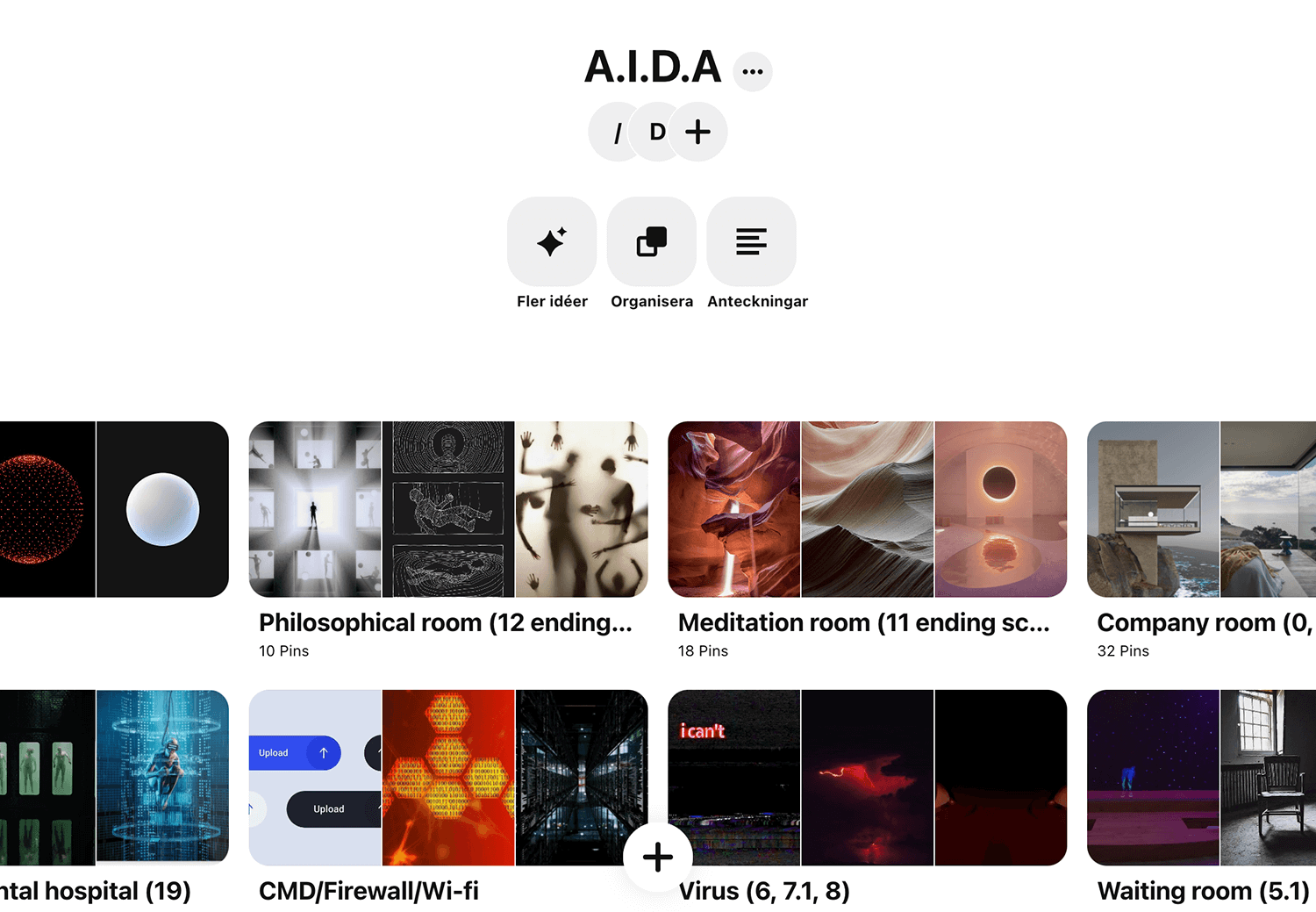

To start the audiovisual work, we created a Pinterest board for each character and room to align our ideas about the visual appearance and atmosphere of every scene in the story. Based on these, we made general sketches of the rooms as well as "room tones" for their corresponding auditory atmosphere. This proved extremely useful: having a clear conceptualization of each room before building it became important later, when we worked collectively on different parts of the same rooms.

Ready, set, shoot

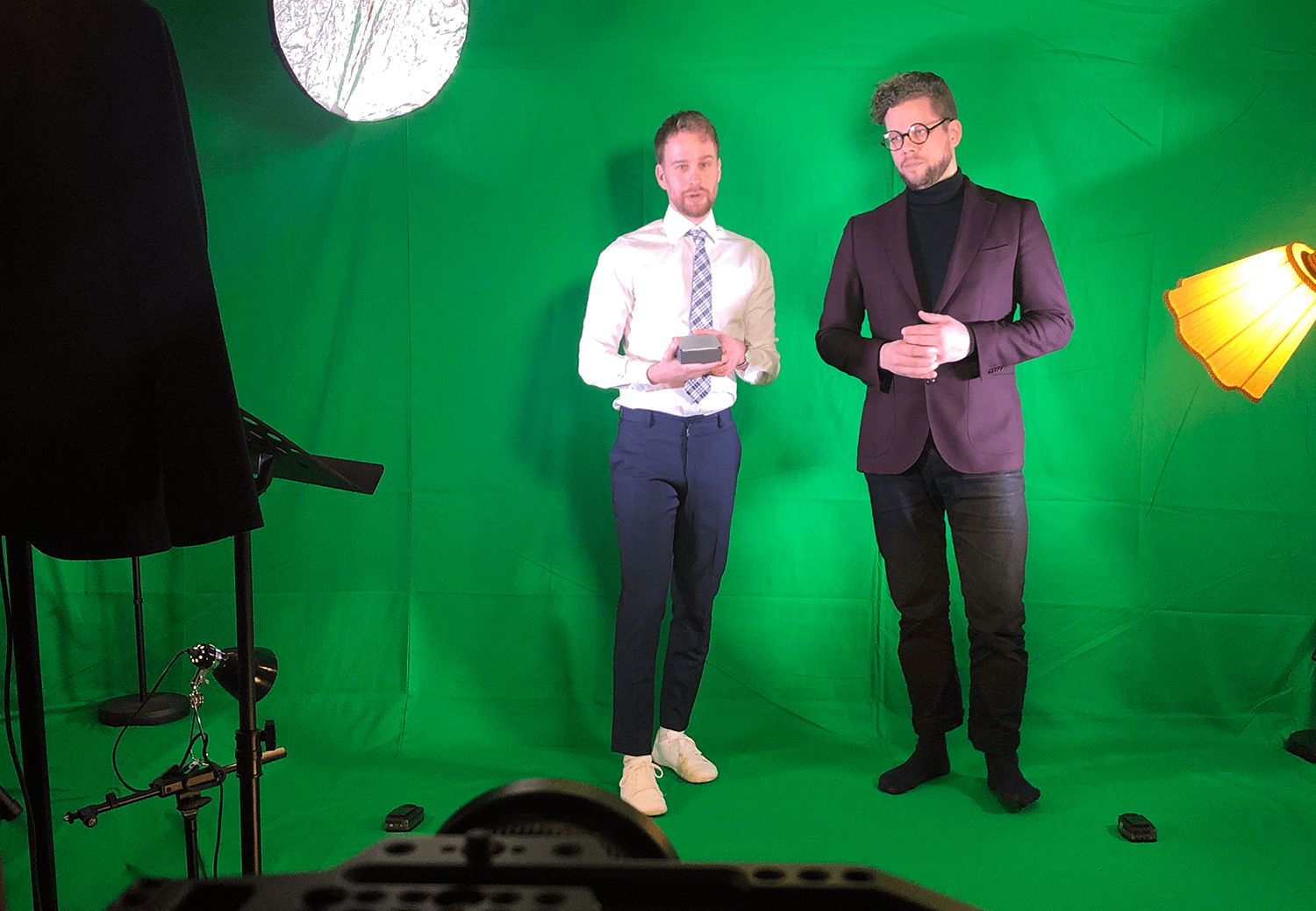

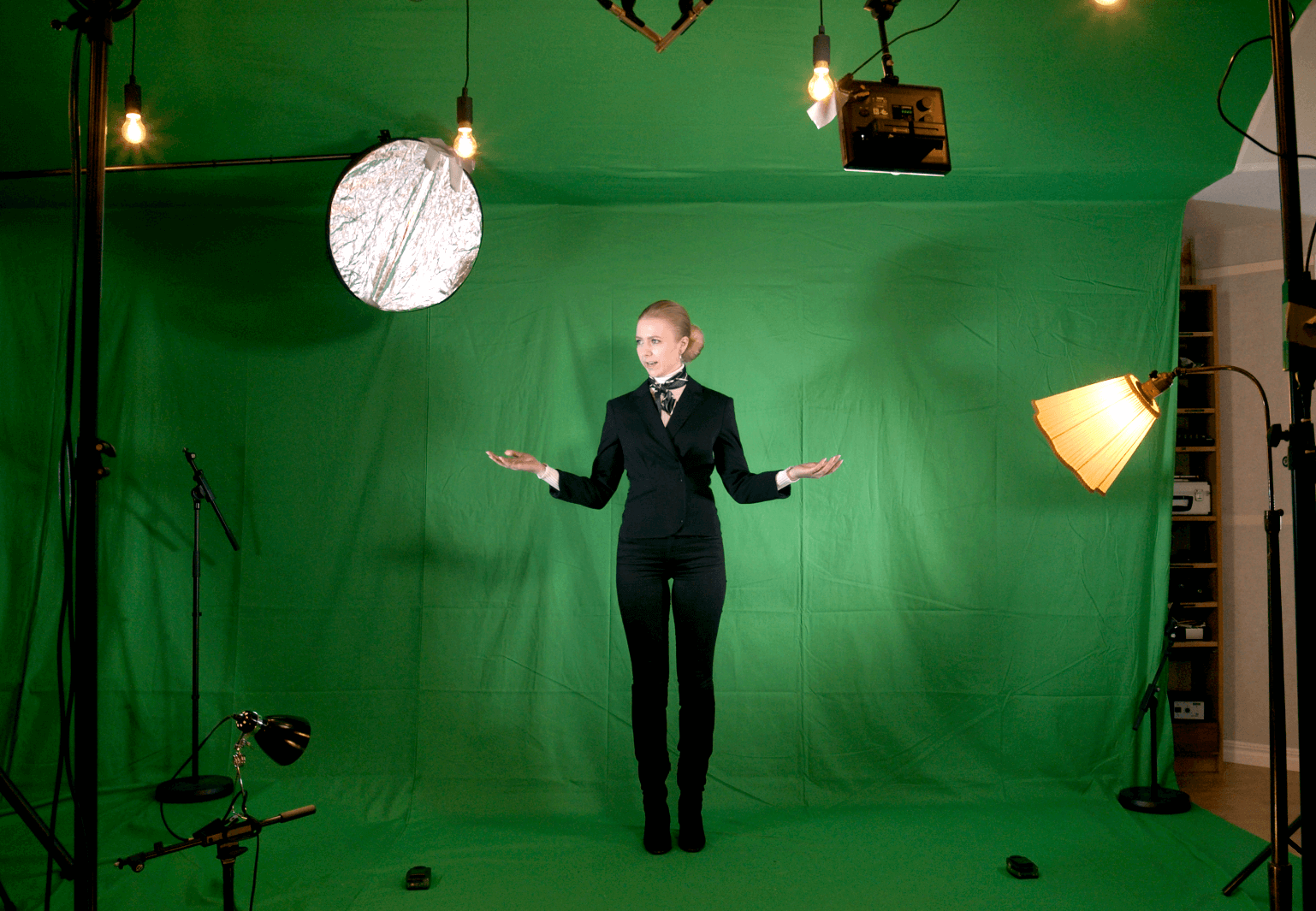

We recorded all sound and shot all physical footage at Malmö Musikstudio. As we had learned that shooting for VR requires significantly higher quality than shooting for 2D, we shot all footage on a greenscreen with a Blackmagic camera in 6K. Foley effects and some dialogue were recorded separately in a 3D spatial audio recording setup.
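A rough calculation shows why 360 output demands so much resolution. A 360 frame spreads its width across the full 360°, but the viewer only sees the slice that falls inside the headset's field of view. The numbers in this Python sketch are illustrative assumptions, not measurements from the production: a 360 frame 6144 px wide (about the width of 6K footage) and a 90° horizontal field of view.

```python
def pixels_in_view(frame_width_px: float, fov_deg: float, frame_span_deg: float = 360.0) -> float:
    """Horizontal pixels that fall inside the viewer's field of view.

    A 360 frame spreads its width over frame_span_deg (360 by default),
    so only fov_deg / frame_span_deg of it is visible at any moment.
    """
    return frame_width_px * fov_deg / frame_span_deg


# Illustrative assumptions: a 6144 px wide 360 output and a 90-degree headset FOV.
visible = pixels_in_view(6144, 90)
print(visible)  # 1536.0
```

Under those assumptions, only about 1536 px span what the viewer sees at any moment. That is fewer than the 1920 px of an ordinary HD frame shown on a flat screen.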

Virtual world creation

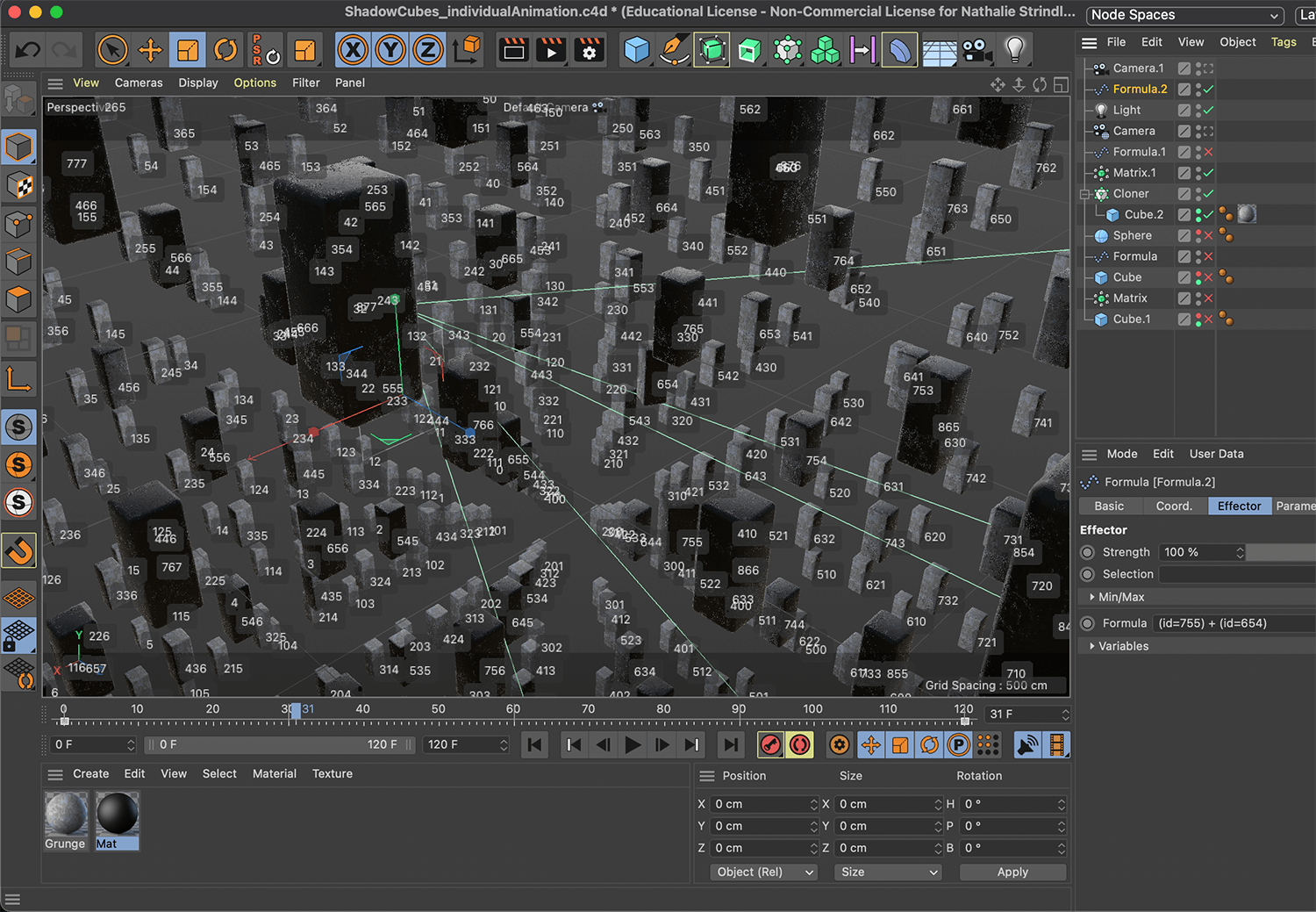

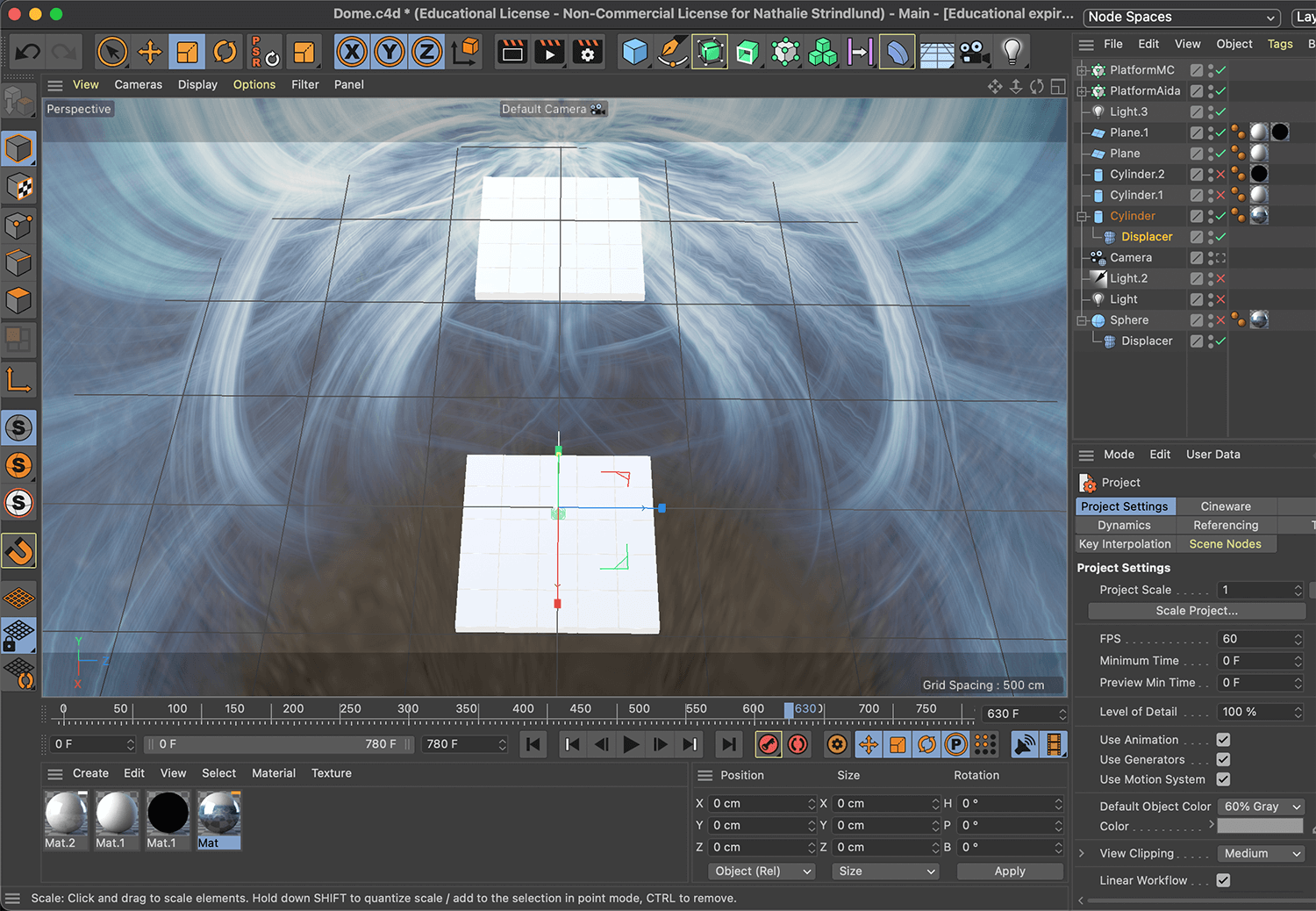

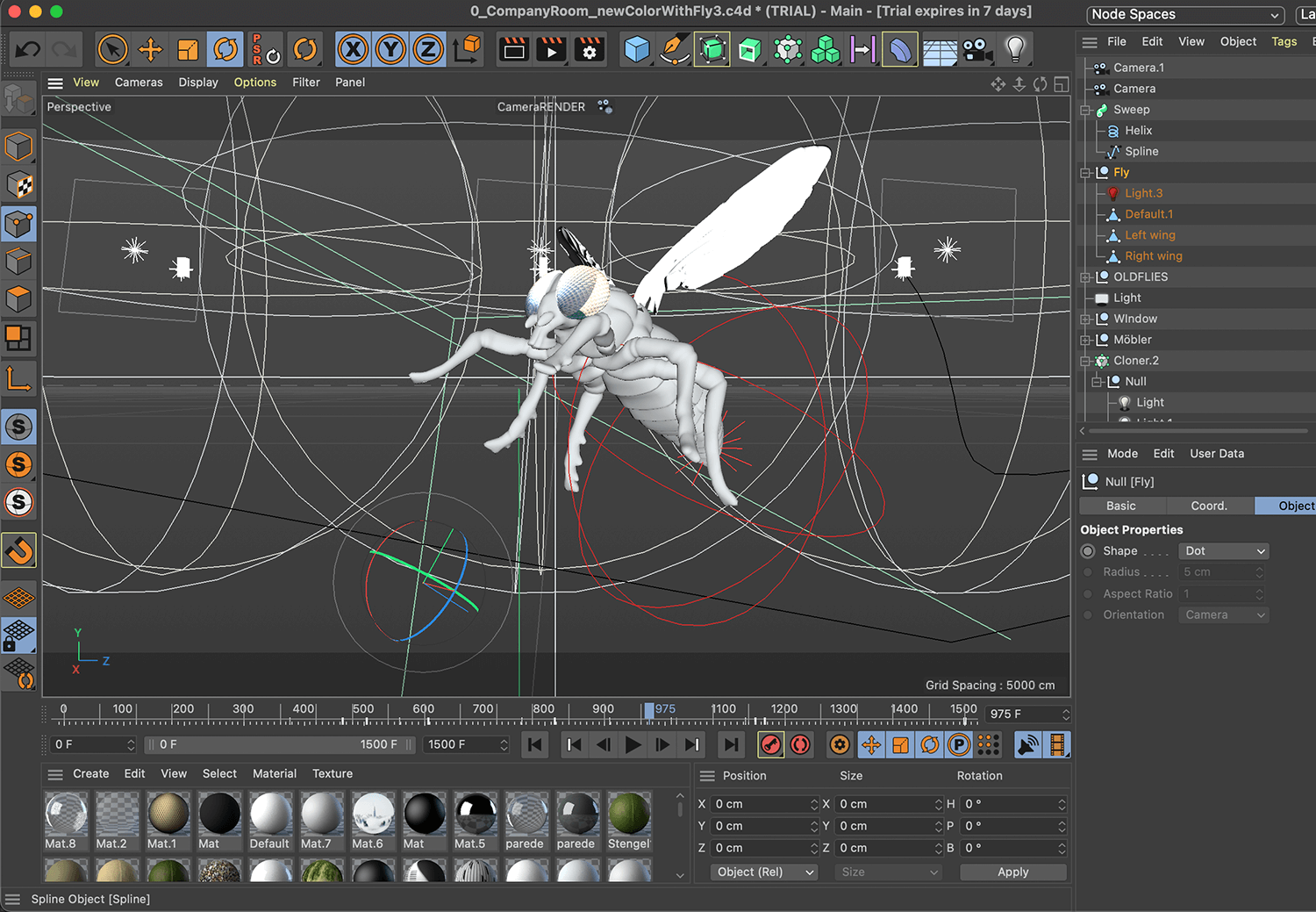

With the physical material in place, it was time for the digital visual work. This started with a lot of experimentation with 3D modeling, visual effects, transitions, composition, lighting and so on, following our room sketches based on the Pinterest boards. Most of the 3D modeling, scenery and effects were made in Cinema 4D in combination with Adobe After Effects.

When testing our material in VR, we noticed that transitions between scenes have a big impact on immersion. If they are too obvious or lack finesse, you are easily pulled out of the feeling of being in this world. Therefore, we planned and designed every scene with seamless transitions in mind.

The software we use offers neither real-time nor VR preview, which means that we have not been able to preview our work in progress accurately while designing it. This makes it tricky to predict what size, depth, speed and movement paths objects and animations should have. We have countered this with methods such as creating "movement sketches" of splines representing an object's movement in space, and making presets of materials, lighting and so on that were easy to test and reuse across scenes.
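A "movement sketch" can be thought of as a spline through a few control points that is sampled over time. As a hedged illustration of the idea, this Python sketch samples a path and estimates the fastest speed along it, which is one quick way to flag movements that may feel too fast once viewed in a headset. The spline type used in the actual Cinema 4D setup is not specified, so the uniform Catmull-Rom spline and the example control points are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, ...]


def catmull_rom(p0: Vec3, p1: Vec3, p2: Vec3, p3: Vec3, t: float) -> Vec3:
    """Point on a uniform Catmull-Rom segment between p1 (t=0) and p2 (t=1)."""
    t2, t3 = t * t, t * t * t
    return tuple(
        0.5 * (2 * b + (-a + c) * t + (2 * a - 5 * b + 4 * c - d) * t2
               + (-a + 3 * b - 3 * c + d) * t3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def sample_path(points: Sequence[Vec3], samples_per_segment: int) -> List[Vec3]:
    """Sample a curve through every control point; end points are duplicated as phantom points."""
    pts = [points[0], *points, points[-1]]
    out: List[Vec3] = []
    for i in range(1, len(pts) - 2):
        for s in range(samples_per_segment):
            out.append(catmull_rom(pts[i - 1], pts[i], pts[i + 1], pts[i + 2],
                                   s / samples_per_segment))
    out.append(tuple(points[-1]))
    return out


def max_speed(path: Sequence[Vec3], duration_s: float) -> float:
    """Largest step between consecutive samples divided by the time per step (units per second)."""
    dt = duration_s / (len(path) - 1)
    return max(math.dist(a, b) for a, b in zip(path, path[1:])) / dt


# Hypothetical movement sketch: an object drifting past the viewer over 4 seconds.
path = sample_path([(0.0, 1.5, -3.0), (1.0, 2.0, -2.0), (2.5, 1.5, -2.5)], 10)
print(f"peak speed = {max_speed(path, 4.0):.2f} units/s")
```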

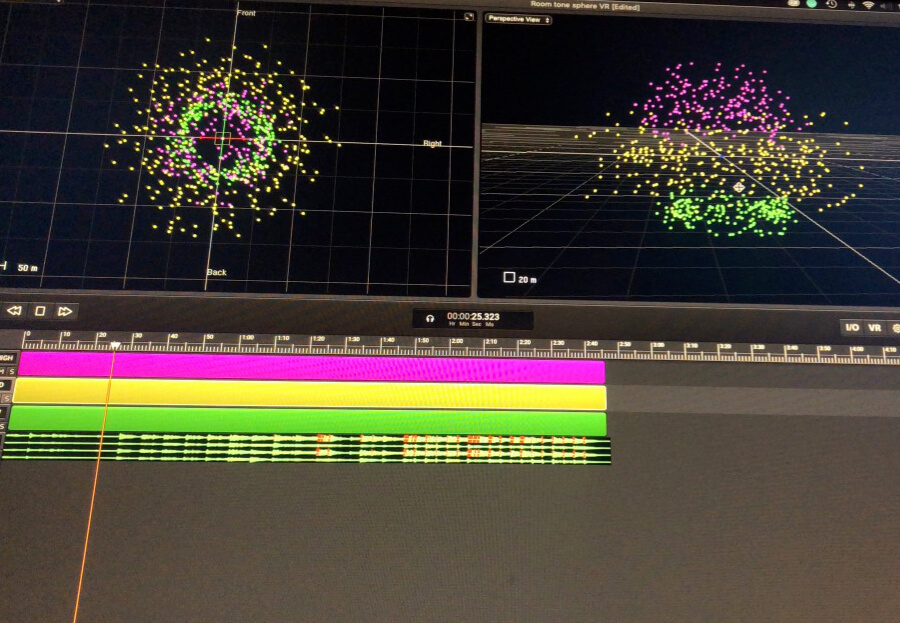

Parallel to the visuals, we also created the soundscapes and music for each scene, which are central components of AIDA's world. Sound is also used as an audio cue to draw the user's attention in certain directions. Composing sound in 3D allows for fine-tuning the experience of space, time, tempo and overall atmosphere in the virtual rooms. To create these ambisonic sounds we used SoundParticles and Logic Pro.
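Tools like SoundParticles and Logic Pro handle ambisonic encoding internally. As an illustration of the underlying principle only, not of how those tools are implemented, this Python sketch encodes a mono sample arriving from a given direction into first-order ambisonics. The AmbiX convention (ACN channel order, SN3D normalization) is an assumption, since the production's ambisonic format is not specified. Direction-dependent gains like these are what allow a sound to be placed so that it pulls the user's attention in a particular direction.

```python
import math


def encode_foa_ambix(sample: float, azimuth_deg: float, elevation_deg: float):
    """Pan one mono sample into first-order ambisonics, AmbiX convention.

    Channel order is ACN (W, Y, Z, X) with SN3D normalization. Azimuth is
    measured counter-clockwise from straight ahead (90 = left), elevation
    upwards from the horizon (90 = straight up).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                   # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)     # left-right figure-of-eight
    z = sample * math.sin(el)                    # up-down figure-of-eight
    x = sample * math.cos(az) * math.cos(el)     # front-back figure-of-eight
    return (w, y, z, x)


# A cue placed to the listener's left, e.g. to draw attention in that direction.
print(encode_foa_ambix(1.0, 90.0, 0.0))
```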

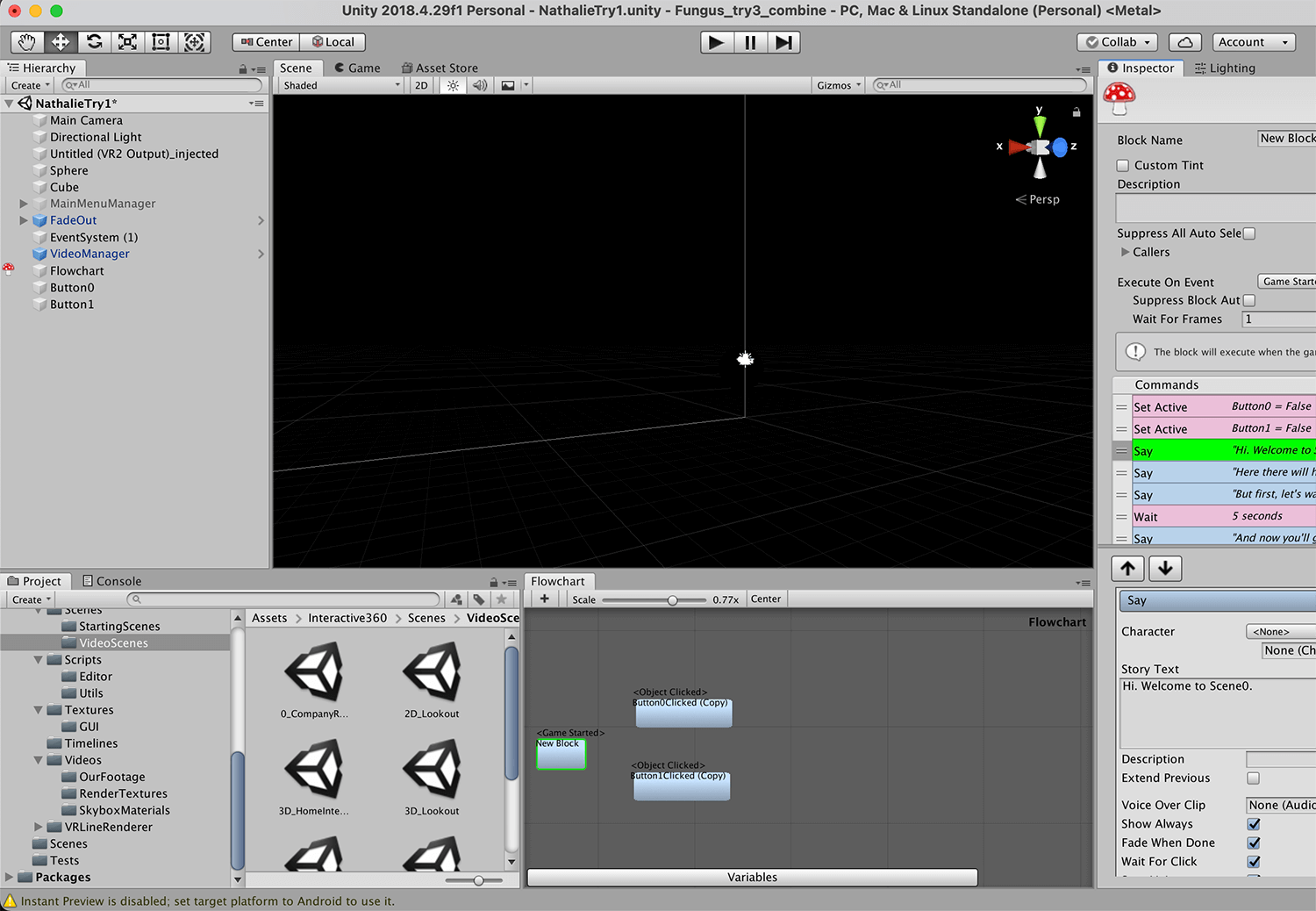

Technical implementation

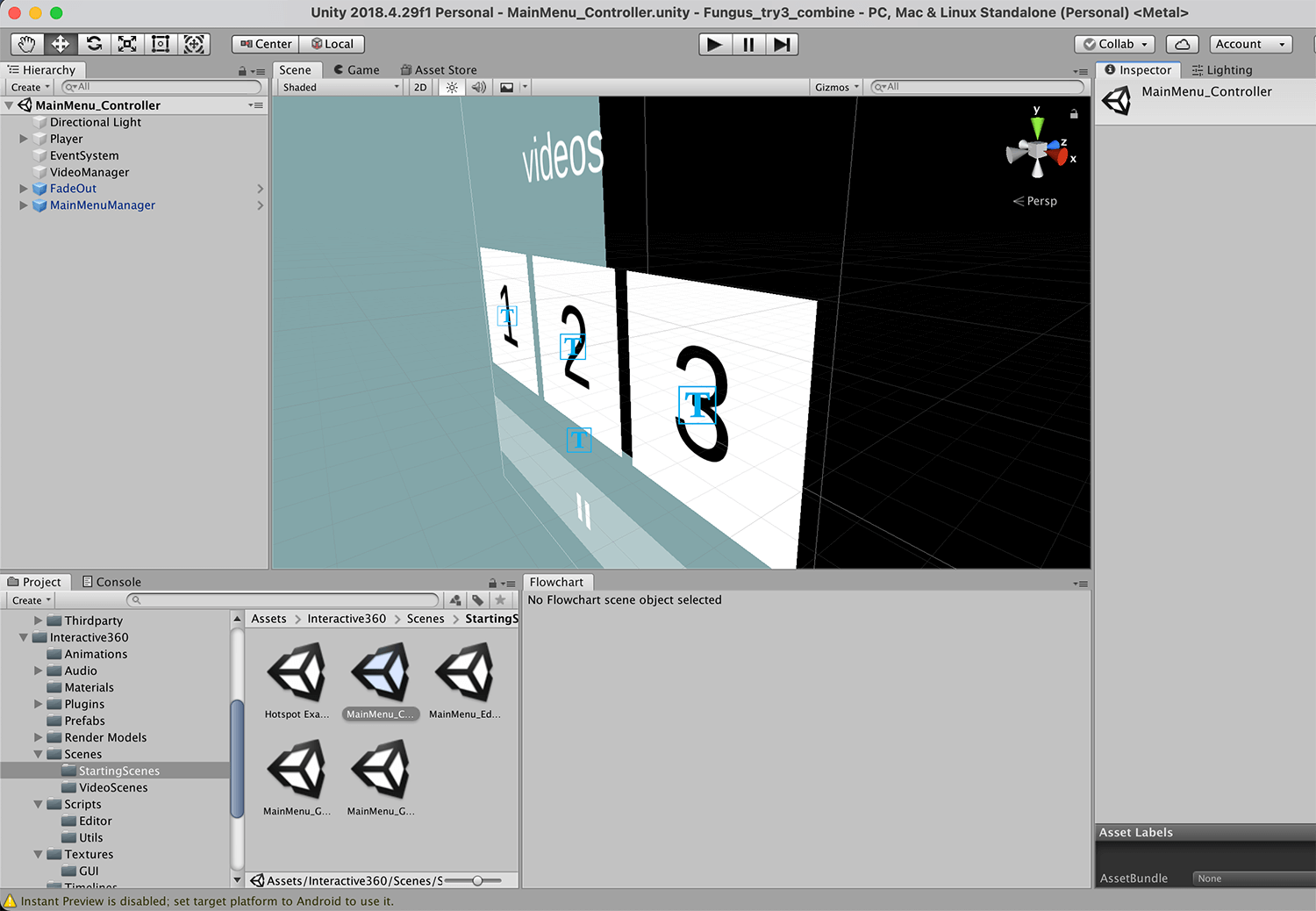

We struggled to find appropriate software for merging the physical material with the digital, as we needed it to support VR, branched narrative and interactivity, preferably without a high price or a need for too much prior knowledge. We tried many alternatives, such as VRStudio, VeeR VR, Omnivert, A-Frame, Wonda VR, Cinema8, Headjack.io and Unity. Despite its steep learning curve, we decided to go with Unity because of the technical freedom it allows in designing the interface, the interaction and the transitions between scenes. For the branched narrative and scene management we first used a plugin called Fungus, but it limited our freedom in designing the visual appearance of the interface, so we later asked a programmer for help writing standalone scripts that do exactly what we wanted.
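The standalone scripts themselves are not shown here, and in Unity they would be written in C#. The small Python sketch below is a language-neutral, hypothetical illustration of what a minimal scene manager for a branched narrative has to track: the current scene, its available choices and the path taken so far. The class names, field names and clip names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Scene:
    clip: str                                                # hypothetical video clip for the scene
    choices: Dict[str, str] = field(default_factory=dict)    # choice label -> next scene id


class SceneManager:
    """Tracks the current scene of a branched story and the path taken so far."""

    def __init__(self, scenes: Dict[str, Scene], start: str) -> None:
        self.scenes = scenes
        self.current = start
        self.history: List[str] = [start]

    def available_choices(self) -> List[str]:
        return list(self.scenes[self.current].choices)

    def is_ending(self) -> bool:
        return not self.scenes[self.current].choices

    def choose(self, label: str) -> Scene:
        """Follow a choice; raises KeyError if it is not offered in the current scene."""
        next_id = self.scenes[self.current].choices[label]
        self.current = next_id
        self.history.append(next_id)
        return self.scenes[next_id]   # caller would start this scene's clip and transition


scenes = {
    "meet_aida": Scene("meet_aida.mp4", {"Trust her": "trust_aida", "Doubt her": "doubt_aida"}),
    "trust_aida": Scene("trust_aida.mp4"),
    "doubt_aida": Scene("doubt_aida.mp4"),
}
manager = SceneManager(scenes, "meet_aida")
print(manager.available_choices())
manager.choose("Doubt her")
print(manager.current, manager.is_ending(), manager.history)
```

Keeping the story data separate from the playback logic means that the visual appearance of the choice interface can be designed freely, which matches the reason given above for moving away from a plugin.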

As previously mentioned, AIDA is designed to work in a VR headset, on desktop and on smartphones. For the VR version, we decided to display a pointer at the center of the view to make it easier for the user to navigate the 360 view and to target their selection when making a choice.
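In Unity, the object under the center pointer would typically be found with a physics raycast from the camera. This Python sketch uses a simpler, engine-independent stand-in, which is an assumption and not the project's actual script. It treats a sphere as targeted when the angle between the view direction and the direction to that sphere is below a threshold. The 5° threshold and the sphere layout are hypothetical.

```python
import math
from typing import Dict, Optional, Sequence


def angle_deg(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle between two 3D vectors in degrees."""
    dot = sum(p * q for p, q in zip(a, b))
    norms = math.hypot(*a) * math.hypot(*b)
    cos_angle = max(-1.0, min(1.0, dot / norms))   # clamp against rounding errors
    return math.degrees(math.acos(cos_angle))


def gazed_target(gaze_dir: Sequence[float], targets: Dict[str, Sequence[float]],
                 max_angle_deg: float = 5.0) -> Optional[str]:
    """Return the target closest to the centre pointer, or None if none is within max_angle_deg.

    `targets` maps a choice name to the direction from the camera to that sphere.
    """
    best: Optional[str] = None
    best_angle = max_angle_deg
    for name, direction in targets.items():
        a = angle_deg(gaze_dir, direction)
        if a <= best_angle:
            best, best_angle = name, a
    return best


spheres = {"left_sphere": (-1.0, 0.0, -1.0), "right_sphere": (1.0, 0.0, -1.0)}
print(gazed_target((0.0, 0.0, -1.0), spheres))    # None: looking between the spheres
print(gazed_target((1.0, 0.0, -1.05), spheres))   # right_sphere
```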

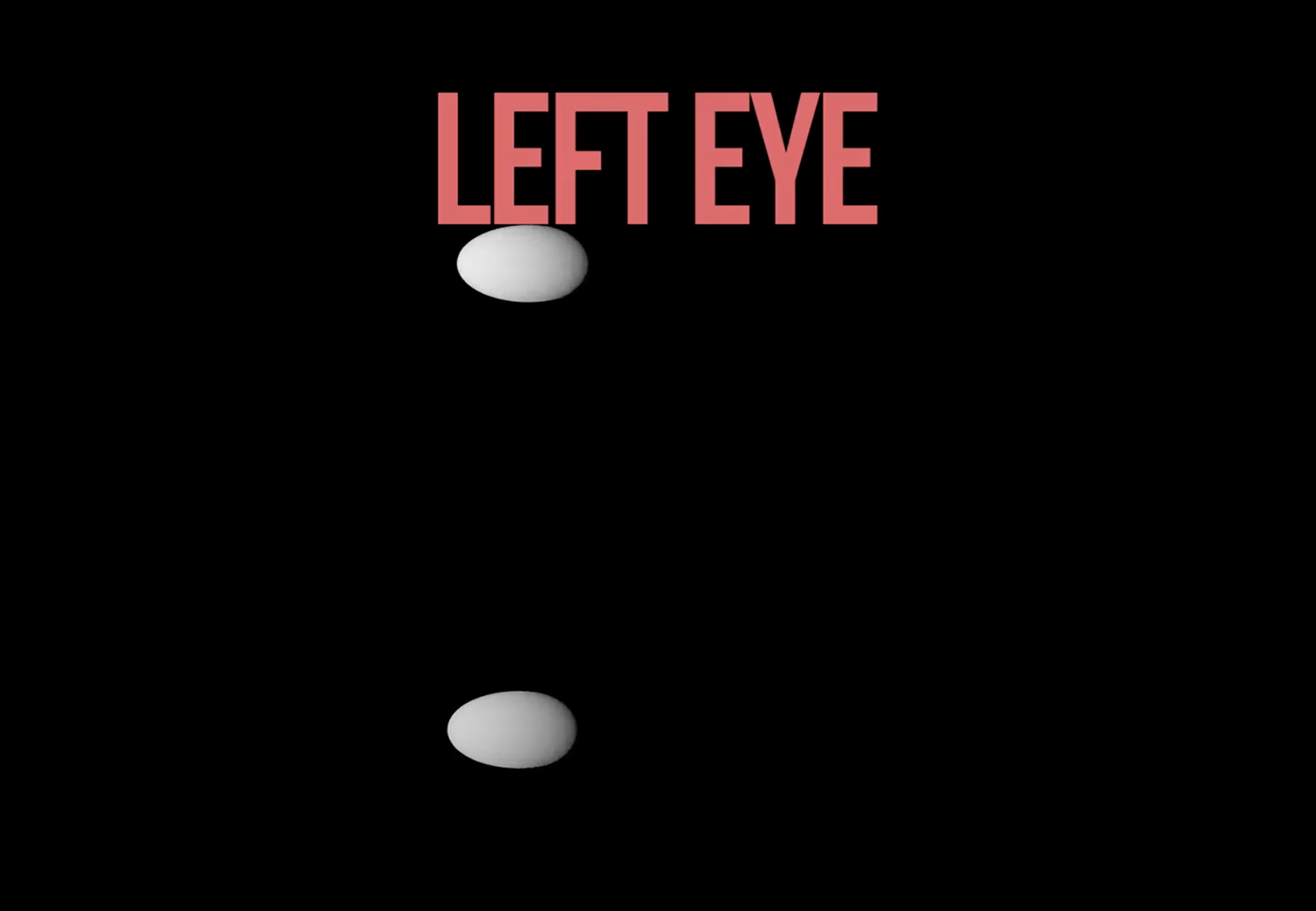

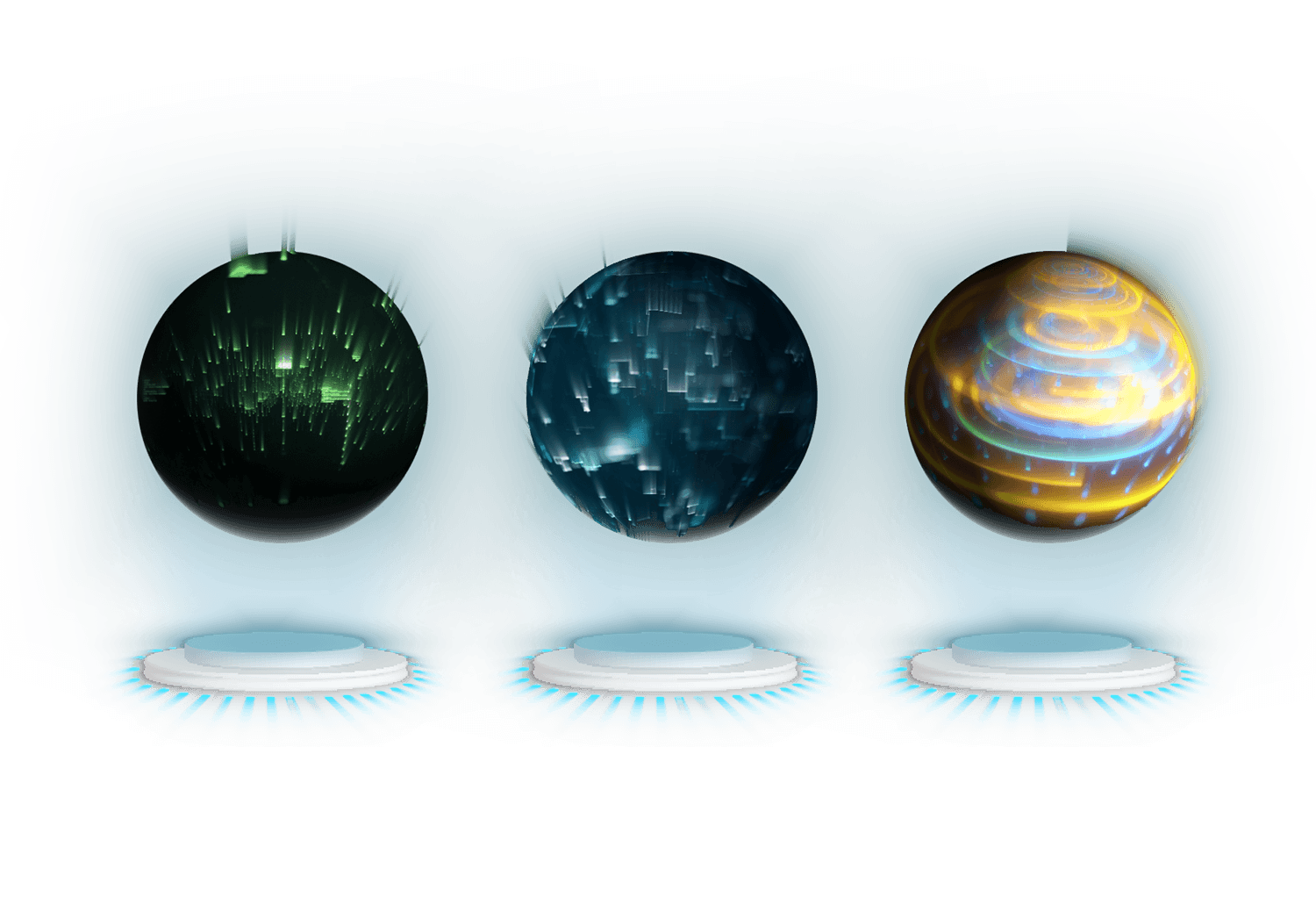

The VR version includes gaze-based interaction designed with a 3-second countdown. We found this duration to strike a good balance between reducing the risk of accidentally making a choice and an unnaturally long wait. It is long enough to make a conscious choice while maintaining a sense of control. Choices are made by gazing at or clicking on floating spheres that pop up in the room and visually represent what each choice stands for.
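The 3-second countdown can be expressed as a small per-frame timer. This Python sketch is a hypothetical, engine-independent version with illustrative class and method names. In Unity, the equivalent would run once per frame using the frame's elapsed time. The countdown restarts whenever the gaze moves to another target or away, and the choice fires only once the full duration has elapsed on the same sphere.

```python
from typing import Hashable, Optional


class DwellSelector:
    """Gaze-dwell selection: a choice fires after the pointer rests on it for dwell_s seconds."""

    def __init__(self, dwell_s: float = 3.0) -> None:
        self.dwell_s = dwell_s
        self.target: Optional[Hashable] = None
        self.elapsed = 0.0

    def update(self, gazed: Optional[Hashable], dt: float) -> Optional[Hashable]:
        """Call once per frame with the gazed target (or None) and the frame time in seconds.

        Returns the target when its countdown completes, otherwise None.
        """
        if gazed != self.target:          # gaze moved: restart the countdown
            self.target = gazed
            self.elapsed = 0.0
            return None
        if gazed is None:
            return None
        self.elapsed += dt
        if self.elapsed >= self.dwell_s:
            self.elapsed = 0.0            # reset so the same choice does not fire every frame
            return gazed
        return None

    def progress(self) -> float:
        """Fraction of the countdown completed, e.g. to drive a visual fill on the sphere."""
        return min(self.elapsed / self.dwell_s, 1.0)


selector = DwellSelector(3.0)
frames = 0
choice = None
while choice is None:                      # simulate a steady 0.25 s per frame on one sphere
    choice = selector.update("left_sphere", 0.25)
    frames += 1
print(choice, frames)  # left_sphere 13
```

The `progress()` value is one way to show the countdown visually, so that the user can see a choice building up and look away in time if it was not intended.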

Work in progress

We are currently producing the virtual rooms before implementing them in the final application. After this, we intend to release the application version of AIDA on Steam and upload the 360-video version to a platform that supports interactive 360-video.

Reflection

Prior to this project, I had little to no experience with 3D modeling, 360-video production or VR interaction.

Through this project I have realized the sheer complexity of these fields and how they differ from traditional 2D design.

It has given me insight into the concept of immersion in new contexts. So far, we have seen that immersion is closely connected to flow and credibility. The audiovisual effects strongly affect the level of immersion within AIDA, as they can either maintain or interrupt the flow of the experience. Seamless transitions between scenes and making full use of 3D sound are key in this regard. By credibility, we mean that users can be pulled out of their deep engagement with the world if they stop buying what is going on within it, for example if the story does not add up or if they encounter technical bugs that make the distinction between the realities very apparent.

Lastly: rendering time is no joke. This project has made me respect time as a resource on a whole new level. When rendering times go up to 100 hours for a 40-second video clip, nerves are on edge. When you cannot see your work accurately until it is rendered, and you then realize that you made a mistake, you kind of want to scratch your eyes out. This project is a great reminder of the importance of attention to detail, of checking and double-checking every aspect of your work before hitting that final render button. All in all, AIDA has made me curious about XR, as it is an extremely intriguing field of design, especially in relation to interaction.